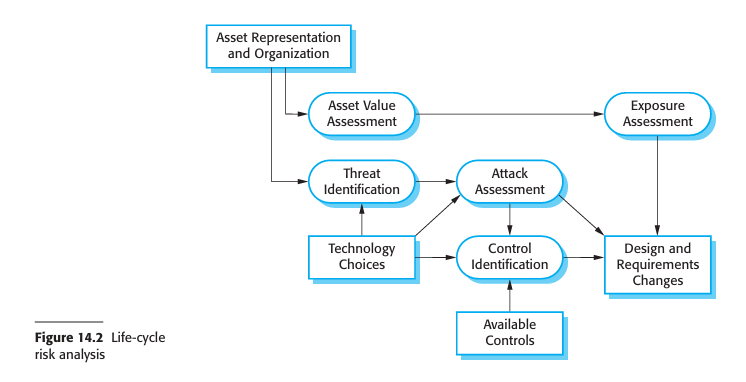

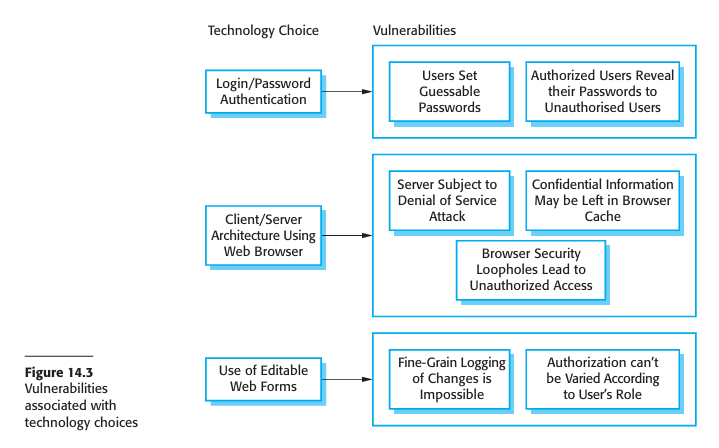

It is very difficult to add security to a system after it has been implemented, so you need to take security issues into account during the system design process. In this section, I focus primarily on issues of system design, because this topic isn't given the attention it deserves in computer security books. Implementation issues and mistakes also have a major impact on security, but these often depend on the specific technology used.

Here, I focus on a number of general, application-independent issues relevant to secure systems design:

1. Architectural design—how do architectural design decisions affect the security of a system?

2. Good practice—what is accepted good practice when designing secure systems?

3. Design for deployment—what support should be designed into systems to avoid the introduction of vulnerabilities when a system is deployed for use?

Beyond the basics, security design must be tailored to an application's specific purpose, criticality, and environment. For example, a military system requires a different approach to data classification (like "secret" or "top secret") than a system handling personal data, which must adhere to data protection laws.

Security, Dependability, and Compromises

There's a strong link between security and dependability. Strategies used for dependability, such as redundancy and diversity, can also help a system resist and recover from attacks. Similarly, high-availability mechanisms can aid in recovering from denial-of-service (DoS) attacks.

Designing for security always involves compromises, especially between security, performance, and usability. Implementing strong security measures, such as encryption, can impact a system's performance by slowing down processes. There is also a tension with usability, as security features like multiple passwords can be inconvenient and lead to users being locked out. The ideal balance among these factors depends on the system's type and its operational environment. For instance, a military system's users are accustomed to rigorous security, whereas a stock trading system requires speed and would find frequent security checks unacceptable.

14.2.1 Architectural design

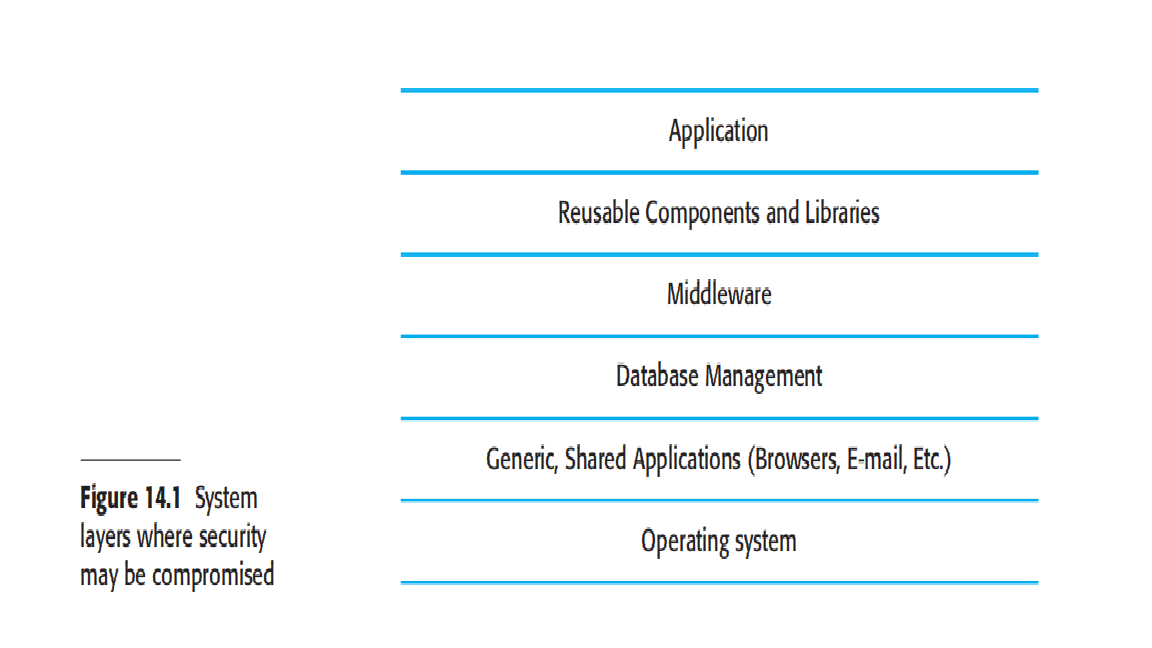

As I have discussed in Chapter 11, the choice of software architecture can have profound effects on the emergent properties of a system. If an inappropriate architecture is used, it may be very difficult to maintain the confidentiality and integrity of information in the system or to guarantee a required level of system availability. In designing a system architecture that maintains security, you need to consider two fundamental issues:

1. Protection: how should the system be organized so that critical assets can be protected against external attack?

2. Distribution: how should system assets be distributed so that the effects of a successful attack are minimized?

These issues are potentially conflicting. If you put all your assets in one place, then you can build layers of protection around them. As you only have to build a single protection system, you may be able to afford a strong system with several protection layers. However, if that protection fails, then all your assets are compromised. Adding several layers of protection also makes it more difficult to meet the system's usability and performance requirements.

On the other hand, if you distribute assets, they are more expensive to protect because protection systems have to be implemented for each copy. Typically, then, you cannot afford as many protection layers. The chances are greater that the protection will be breached. However, if this happens, you don't suffer a total loss. It may be possible to duplicate and distribute information assets so that if one copy is corrupted or inaccessible, then the other copy can be used. However, if the information is confidential, keeping additional copies increases the risk that an intruder will gain access to this information.

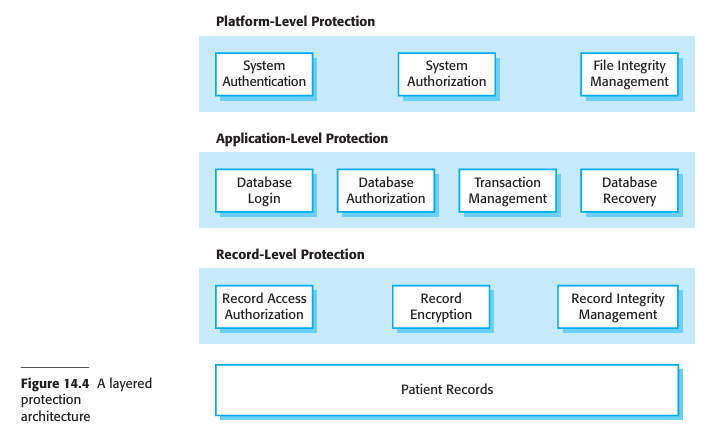

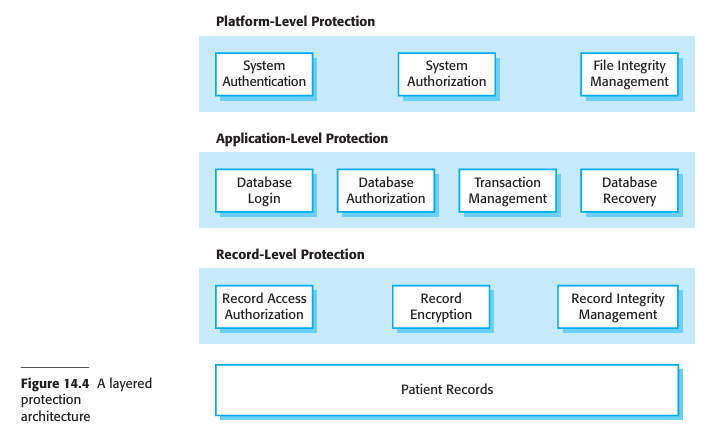

For the patient record system, it is appropriate to use a centralized database architecture. To provide protection, you use a layered architecture with the critical protected assets at the lowest level in the system, with various layers of protection around them. Figure 14.4 illustrates this for the patient record system in which the critical assets to be protected are the records of individual patients.

In order to access and modify patient records, an attacker has to penetrate three system layers:

1. Platform-level protection: The top level controls access to the platform on which the patient record system runs. This usually involves a user signing on to a particular computer. The platform will also normally include support for maintaining the integrity of files on the system, backups, etc.

2. Application-level protection: The next protection level is built into the application itself. It involves a user accessing the application, being authenticated, and getting authorization to take actions such as viewing or modifying data. Application-specific integrity management support may be available.

3. Record-level protection: This level is invoked when access to specific records is required, and involves checking that a user is authorized to carry out the requested operations on that record. Protection at this level might also involve encryption to ensure that records cannot be browsed using a file browser. Integrity checking using, for example, cryptographic checksums, can detect changes that have been made outside the normal record update mechanisms.
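The three layers can be sketched in code. The following is a minimal illustration, not the patient record system's actual design: the session fields, the access control table, the `read_record` function, and the demonstration key are all hypothetical names that I have introduced for the example. It shows an access request passing through the platform, application, and record checks in turn, followed by a keyed cryptographic checksum (HMAC-SHA256) that detects records changed outside the normal update mechanism. Record encryption is omitted to keep the sketch short.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real system would load it
# from a protected key store rather than embedding it in source code.
INTEGRITY_KEY = b"demo-key-not-for-production"


class AccessDenied(Exception):
    """Raised when one of the protection layers rejects the request."""


def checksum(record: bytes) -> str:
    """Keyed cryptographic checksum stored alongside each record."""
    return hmac.new(INTEGRITY_KEY, record, hashlib.sha256).hexdigest()


def read_record(session: dict, store: dict, acl: dict, record_id: str) -> bytes:
    # Layer 1: platform level. The user must have signed on to the computer.
    if not session.get("platform_login"):
        raise AccessDenied("platform")
    # Layer 2: application level. The user must be authenticated by the application.
    if not session.get("app_authenticated"):
        raise AccessDenied("application")
    # Layer 3: record level. The user must be authorized for this operation
    # on this particular record.
    if "read" not in acl.get((session["user"], record_id), set()):
        raise AccessDenied("record")
    # Integrity check: detect changes made outside the normal update path.
    data, stored_checksum = store[record_id]
    if not hmac.compare_digest(checksum(data), stored_checksum):
        raise ValueError("record was modified outside the update mechanism")
    return data
```

An attacker who bypasses one check, for example by obtaining a platform login, is still stopped by the remaining layers, which is the point of organizing protection in this way.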

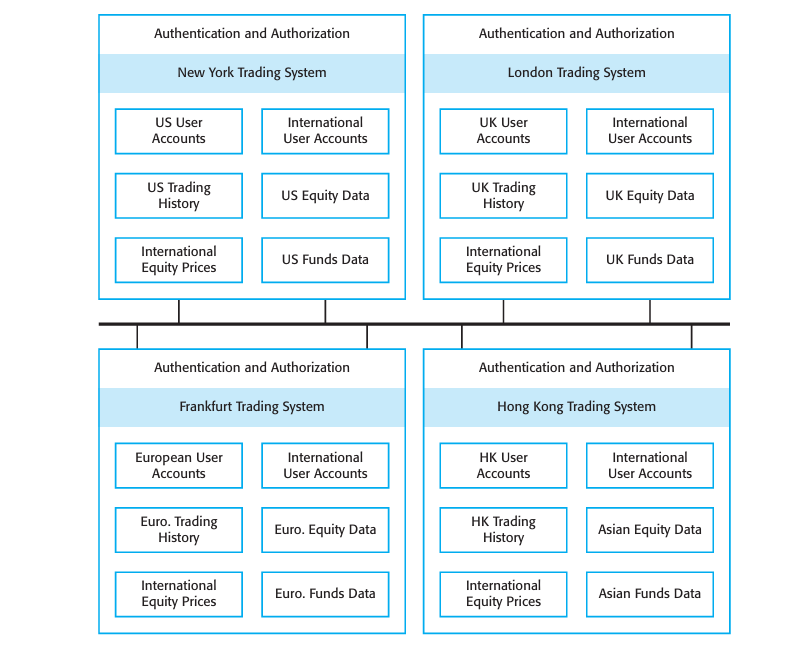

The number of protection layers that you need depends on the criticality of the data, but security must be balanced against usability: multiple passwords, for example, irritate users. A client-server architecture is usually effective when the data to be protected is critical. However, if its protection is breached, the consequences and the recovery costs are likely to be high, and the server is also vulnerable to denial-of-service (DoS) attacks that overload it. For systems where DoS attacks are a major risk, a distributed object architecture may be a better choice. This approach spreads system assets across multiple platforms, each with its own protection mechanisms, so that some services remain operational even if one node is attacked. For instance, a distributed banking system can replicate critical data across nodes so that trading can continue even if the node for a specific market goes down.

Finally, there's an inherent tension between security and performance. The architecture that best meets security requirements often conflicts with performance needs. For example, a layered security approach for a large database ensures confidentiality but adds communication overhead, which slows down data access. Designers must discuss these trade-offs with clients to find an acceptable balance.

14.2.2 Design guidelines

There are no universal rules for achieving system security, as the required measures depend on the system's type and the users' attitudes. For instance, bank employees will accept more stringent security procedures than university staff. However, some general guidelines are widely applicable when designing secure systems. These guidelines are valuable for two main reasons:

1. They raise awareness of security issues: Software engineers often prioritize immediate goals like getting the software to work, which can cause them to overlook security. These guidelines help ensure that security is considered during critical design decisions.

2. They serve as a review checklist: The guidelines can be used in the system validation process to create specific questions that check how security has been engineered into the system.

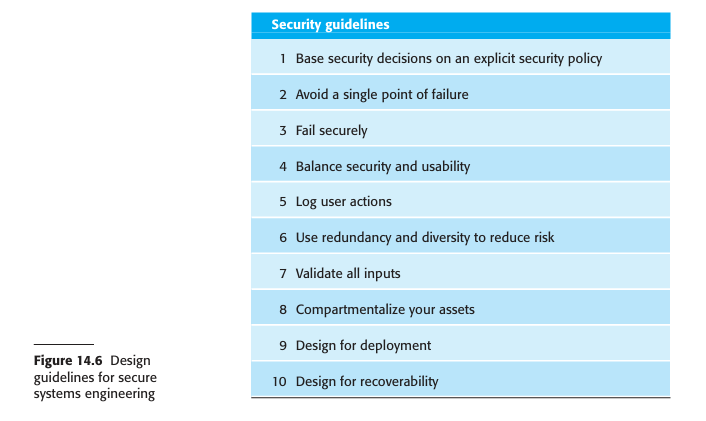

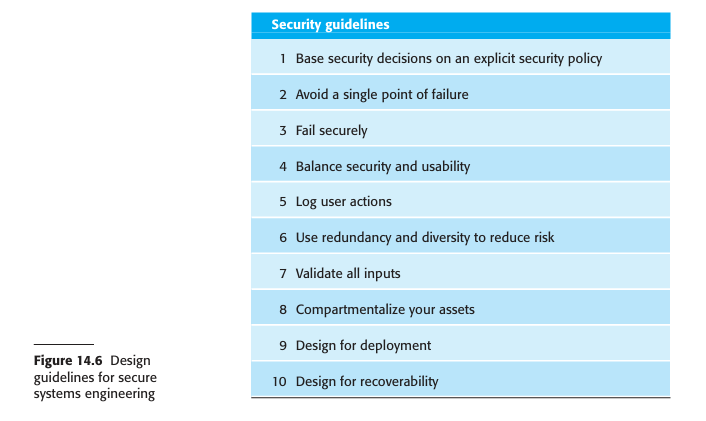

The 10 design guidelines summarized in Figure 14.6 are derived from various sources and focus on the software specification and design phases. Other general principles like "Secure the weakest link" and "Keep it simple" are also important but are less directly tied to the engineering decisions discussed here.

| Security guideline | Description |
| --- | --- |
| 1. Base security decisions on an explicit security policy | All security decisions should be guided by a high-level organizational security policy that defines what security means for the company. This policy should be a framework for design, not a list of specific mechanisms. If no formal policy exists, designers should work with management to create one to ensure consistent and approved decisions. |
| 2. Avoid a single point of failure | Implement multiple, layered defenses ("defense in depth") so the system doesn’t rely on just one measure. For example, use both passwords and challenge/response systems, or maintain logs and backups for data integrity. |
| 3. Fail securely | Ensure that when systems fail, they do not compromise security. For instance, encrypt local data left after a server failure to maintain confidentiality. |
| 4. Balance security and usability | Overly strict security can frustrate users and encourage insecure workarounds. Find a practical balance to maintain both protection and usability. |
| 5. Log user actions | Keep detailed logs of who did what, when, and where. Logs help recover from failures and trace attacks, though they should be secured against tampering. |
| 6. Use redundancy and diversity to reduce risk | Maintain multiple, diverse versions of software or data (e.g., different OSs). This prevents one vulnerability from compromising all systems. |
| 7. Validate all inputs | Never trust user input. Validate and sanitize all data to prevent buffer overflows, SQL injection, and other injection-based attacks. |
| 8. Compartmentalize your assets | Separate system assets so users can only access what they need. This limits the damage from a compromised account, while emergency overrides must be logged. |
| 9. Design for deployment | Make the system easy and secure to configure. Automate checks for configuration errors to reduce risks introduced during deployment. |
| 10. Design for recoverability | Assume breaches will happen and plan recovery. Have backups, alternate authentication systems, and secure procedures for restoring a trusted state. |
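Guideline 7 is the one that maps most directly onto code. The sketch below assumes a hypothetical `patients` table and a hypothetical identifier format of two capital letters followed by six digits; neither comes from the patient record system itself. It combines two common defenses: the input is checked against the expected format before use, and the value is passed to the database as a bound parameter rather than being pasted into the SQL text.

```python
import re
import sqlite3

# Hypothetical identifier format, assumed for illustration: AB123456.
PATIENT_ID = re.compile(r"[A-Z]{2}[0-9]{6}")


def find_patient(conn: sqlite3.Connection, patient_id: str):
    """Return the patient's name, or None if there is no such patient."""
    # Reject anything that does not match the expected format exactly.
    if not PATIENT_ID.fullmatch(patient_id):
        raise ValueError("invalid patient identifier")
    # The "?" placeholder binds the value as data, so it is never
    # interpreted as part of the SQL statement.
    row = conn.execute(
        "SELECT name FROM patients WHERE id = ?", (patient_id,)
    ).fetchone()
    return row[0] if row else None
```

With this function, an input such as `x' OR '1'='1` is rejected by the format check, and even if the check were removed, the bound parameter would be treated as a literal identifier that matches no row.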

14.2.3 Design for deployment

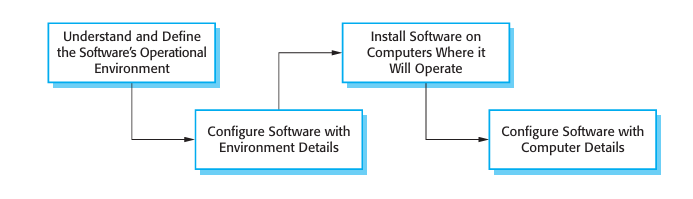

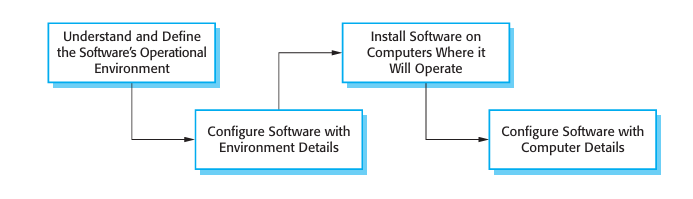

The deployment of a system involves configuring the software to operate in an operational environment, installing the system on the computers in that environment, and then configuring the installed system for these computers (Figure 14.7). Configuration may be a simple process that involves setting some built-in parameters in the software to reflect user preferences. Sometimes, however, configuration is complex and requires the specific definition of business models and rules that affect the execution of the software.

It is at this stage of the software process that vulnerabilities in the software are often accidentally introduced. For example, during installation, software often has to be configured with a list of allowed users. When delivered, this list simply consists of a generic administrator login such as 'admin' and a default password, such as 'password'. This makes it easy for an administrator to set up the system. Their first action should be to introduce a new login name and password, and to delete the generic login name. However, it's easy to forget to do this. An attacker who knows of the default login may then be able to gain privileged access to the system. Configuration and deployment are often seen as system administration issues and so are considered to be outside the scope of software engineering processes.

Certainly, good management practice can avoid many security problems that arise from configuration and deployment mistakes. However, software designers have the responsibility to 'design for deployment'. You should always provide built-in support for deployment that will reduce the probability that system administrators (or users) will make mistakes when configuring the software.

I recommend four ways to incorporate deployment support in a system:

1. Include support for viewing and analyzing configurations

You should always include facilities in a system that allow administrators or permitted users to examine the current configuration of the system. This facility is, surprisingly, lacking from most software systems and users are frustrated by the difficulties of finding configuration settings. For example, in the version of the word processor that I used to write this chapter, it is impossible to see or print the settings of all system preferences on a single screen. However, if an administrator can get a complete picture of a configuration, they are more likely to spot errors and omissions. Ideally, a configuration display should also highlight aspects of the configuration that are potentially unsafe—for example, if a password has not been set up.
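As a rough sketch of such a facility, the function below lists every setting in one place and flags those that look unsafe. The setting names (`admin_login`, `admin_password`, `auto_update`) and the rules applied to them are assumptions made for the example, not the configuration of any real product.

```python
# Hypothetical rules: each maps a setting name to a function that returns
# a warning string if the value looks unsafe, or None otherwise.
UNSAFE_CHECKS = {
    "admin_password": lambda v: "no password has been set" if not v else None,
    "admin_login": lambda v: "default login name still in use" if v == "admin" else None,
    "auto_update": lambda v: "automatic security updates are disabled" if v is False else None,
}


def describe_config(config: dict) -> list:
    """Return one line per setting, marking potentially unsafe values."""
    lines = []
    for name in sorted(config):
        value = config[name]
        check = UNSAFE_CHECKS.get(name)
        warning = check(value) if check else None
        # Never echo a password that has been set; only show that it exists.
        shown = "<set>" if name.endswith("password") and value else repr(value)
        suffix = f"  [UNSAFE: {warning}]" if warning else ""
        lines.append(f"{name} = {shown}{suffix}")
    return lines


if __name__ == "__main__":
    for line in describe_config({"admin_login": "admin", "admin_password": "", "port": 8443}):
        print(line)
```

Because the whole configuration is produced by a single function, it can be shown on one screen or printed, and the same checks can be run automatically after installation.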

2. Minimize default privileges

You should design software so that the default configuration of a system provides minimum essential privileges. This way, the damage that any attacker can do can be limited. For example, the default system administrator authentication should only allow access to a program that enables an administrator to set up new credentials. It should not allow access to any other system facilities. Once the new credentials have been set up, the default login and password should be deleted automatically.
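One way to realize this is sketched below. The `AccountStore` class, its privilege names, and the password-hashing parameters are illustrative assumptions rather than a recommended design. The default account carries a single privilege, `set_credentials`, and is removed automatically as soon as a new administrator account has been created.

```python
import hashlib
import hmac
import os


class AccountStore:
    """Sketch of an account store whose default login has minimal privileges."""

    DEFAULT_LOGIN = "admin"
    ADMIN_PRIVILEGES = {"set_credentials", "manage_users", "view_config"}

    def __init__(self, default_password: str):
        self._accounts = {}
        # The default account may do one thing only: set up new credentials.
        self._add(self.DEFAULT_LOGIN, default_password, {"set_credentials"})

    def _hash(self, password: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)

    def _add(self, login: str, password: str, privileges: set) -> None:
        salt = os.urandom(16)
        self._accounts[login] = (salt, self._hash(password, salt), frozenset(privileges))

    def privileges(self, login: str, password: str) -> frozenset:
        """Return the privileges granted, or an empty set if login fails."""
        entry = self._accounts.get(login)
        if entry is None:
            return frozenset()
        salt, digest, privs = entry
        ok = hmac.compare_digest(self._hash(password, salt), digest)
        return privs if ok else frozenset()

    def set_credentials(self, login, password, new_login, new_password) -> None:
        if "set_credentials" not in self.privileges(login, password):
            raise PermissionError("not allowed to set credentials")
        if new_login == self.DEFAULT_LOGIN:
            raise ValueError("choose a login name other than the default")
        self._add(new_login, new_password, self.ADMIN_PRIVILEGES)
        # Delete the default login automatically once it has served its purpose.
        self._accounts.pop(self.DEFAULT_LOGIN, None)
```

An attacker who knows the default login can therefore do nothing except trigger the credential setup step, and only until the real administrator has completed it.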

3. Localize configuration settings

When designing system configuration support, you should ensure that everything in a configuration that affects the same part of a system is set up in the same place. To use the word processor example again, in the version that I use, I can set up some security information, such as a password to control access to the document, using the Preferences/Security menu. Other information is set up in the Tools/Protect Document menu. If configuration information is not localized, it is easy to forget to set it up or, in some cases, not even be aware that some security facilities are included in the system.

4. Provide easy ways to fix security vulnerabilities

You should include straightforward mechanisms for updating the system to repair security vulnerabilities that have been discovered. These could include automatic checking for security updates, or downloading of these updates as soon as they are available. It is important that users cannot bypass these mechanisms as, inevitably, they will consider other work to be more important. There are several recorded examples of major security problems that arose (e.g., complete failure of a hospital network) because users did not update their software when asked to do so.
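The decision logic behind such a mechanism can be very small. The sketch below assumes a hypothetical update feed in which each entry carries a three-part version number and a flag saying whether it fixes a security vulnerability; how the feed is fetched and how updates are installed are deliberately left out. It separates updates that the user may defer from security updates that the system should install without offering a way to skip them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    version: tuple       # e.g. (2, 0, 1); hypothetical three-part scheme
    security_fix: bool   # True if the update repairs a vulnerability


def parse_version(text: str) -> tuple:
    """Convert a string such as '2.0.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def pending_updates(installed: tuple, available: list) -> list:
    """Updates newer than the installed version, oldest first."""
    newer = (u for u in available if u.version > installed)
    return sorted(newer, key=lambda u: u.version)


def must_install_now(installed: tuple, available: list) -> bool:
    """True if any pending update is a security fix and so cannot be deferred."""
    return any(u.security_fix for u in pending_updates(installed, available))
```

A caller would run this check at startup or on a timer and, when `must_install_now` returns `True`, proceed with installation rather than asking the user for permission.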